Most organizations depend on a complex set of information systems for their mission-critical functions. Risks to these systems and the information they contain are among the many concerns of management at all levels. For practical, operational reasons, most organizations accept some vulnerabilities in their information systems and plan to remediate them later. The risks created by these ‘accepted’ vulnerabilities are referred to as residual risks.

Organizations and their information systems can hardly afford to stagnate. Numerous changes, varying in scope and scale, are made to information systems continuously, and the residual risk landscape shifts accordingly. For information security professionals, residual risk is of paramount importance: they track, report, mitigate, escalate, change, and discuss residual risks constantly. Numerous best practices, guidelines, and standards on information risk management can be applied to residual risk activities.

When accepted vulnerabilities are not resolved on schedule, they become a concern for those responsible for mitigating risk. The delay leaves information systems exposed, for prolonged periods, to threats that can emerge rapidly. Residual risk increases in proportion to the delay in mitigation.

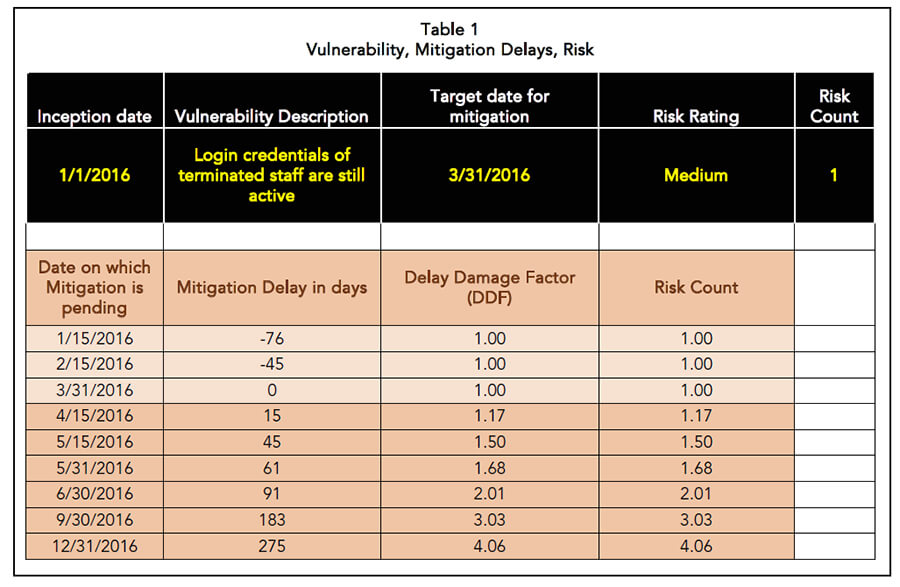

Consider a vulnerability that was identified on January 1st, resulting in a medium risk. Assume a target date of March 31st for its resolution. This looks simple enough: we now have one accepted medium risk, to be mitigated within three months.

What if this vulnerability were to remain unresolved as of April 15th? Instead of the original 90 days, it has now been 105 days since the vulnerability was identified, and it is still unresolved. The delay in resolving this vulnerability increases the likelihood of an exploit being developed. If we accept that risk rises in proportion to the delay, I propose that the risk in this hypothetical case should be treated as 105/90 times its original value.

We will refer to this date-dependent ratio as the Delay Damage Factor (DDF). In this example, the DDF measured on April 15th is 105/90, or approximately 1.17, so the originally computed value of one medium risk should be treated as 1.17 times the original risk. Let us further assume that this vulnerability remains unresolved on May 15th. Applying the same rule, the ratio has increased to 135/90, which equals 1.5. On May 15th, the originally computed value of this medium risk should be treated as 1.5 times the original risk.

The rules for computing this Delay Damage Factor on any given day are:

- If the given day is earlier than or equal to the original estimated day of mitigation, the value of the DDF is 1.

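- If the given day is after the original estimated day of mitigation, the value of the DDF is the delay as of the given day divided by the delay as per the original estimated day of mitigation, where each delay is counted in days since the vulnerability was identified (105/90 in the April 15th example).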

In short, risk increases in direct proportion to mitigation delays.
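The rule can be written as a piecewise formula. The notation is introduced here for illustration and is not the article's own. $t$ is the given day and $t_m$ is the original estimated day of mitigation. $d(t)$ is the number of days elapsed since identification as of $t$. $d_0 = d(t_m)$ is the number of days originally allowed for mitigation.

$$
\mathrm{DDF}(t) =
\begin{cases}
1, & t \le t_m \\[4pt]
\dfrac{d(t)}{d_0}, & t > t_m
\end{cases}
\qquad\text{e.g.}\quad \mathrm{DDF}(\text{April 15th}) = \frac{105}{90} \approx 1.17
$$

Because $d(t_m) = d_0$, both branches equal 1 on the target date. DDF therefore rises continuously from 1 rather than jumping once the target date passes.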

The table above elaborates further on our example and demonstrates the use of DDF to measure risk.

In the example, when the risk remains unmitigated on December 31st, its DDF is computed as 365/90, or approximately 4.06. Mitigation delays have kept the vulnerability unresolved for just over four times the original commitment, and the risk has grown by the same factor.
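Below is a minimal Python sketch of the basic rule. The day-counting convention is an assumption, chosen because it reproduces the article's figures. The identification day counts as day 1, so January 1st to March 31st is 90 days and January 1st to April 15th is 105 days. The year 2023 is an arbitrary non-leap year, and the function names are hypothetical.

```python
from datetime import date


def days_elapsed(identified: date, as_of: date) -> int:
    """Days since identification, counting the identification day as day 1.

    ASSUMPTION: this convention matches the article's numbers
    (Jan 1 -> Mar 31 = 90 days, Jan 1 -> Apr 15 = 105 days).
    """
    return (as_of - identified).days + 1


def delay_damage_factor(identified: date, target: date, as_of: date) -> float:
    """DDF is 1 up to the target date, then elapsed days / planned days."""
    if as_of <= target:
        return 1.0
    planned = days_elapsed(identified, target)
    return days_elapsed(identified, as_of) / planned


if __name__ == "__main__":
    identified = date(2023, 1, 1)  # hypothetical non-leap year
    target = date(2023, 3, 31)
    for as_of in (date(2023, 3, 31), date(2023, 4, 15),
                  date(2023, 5, 15), date(2023, 12, 31)):
        print(as_of, round(delay_damage_factor(identified, target, as_of), 2))
```

Run as a script, it prints DDF values of 1.0, 1.17, 1.5 and 4.06 for March 31st, April 15th, May 15th and December 31st. These match the figures in the text.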

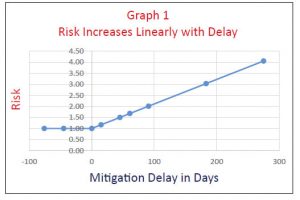

Graph 1 shows how, according to DDF, the risk in our example (Table 1) grows over time due to mitigation delays.


It is important at this point to mention what DDF is and what it is not:

DDF is a measure of the impact of mitigation delays on risks. It expresses the factor by which a given risk has grown.

DDF is not a measure of the absolute value of risk itself.

Now that we have a foundation for DDF, let us extend the concept by asking whether there is a threshold delay beyond which we should assume an exponential increase in DDF. Suppose our hypothetical organization sets a threshold delay of 300 days, beyond which it computes DDF not as the plain ratio but as that ratio raised to an exponent. DDF then passes through three phases. It is 1 until the original estimated day of mitigation, then a ratio, and ultimately a ratio raised to an exponent.

The rules for computing the DDF with a threshold on any given day are as follows:

- If the ‘given day’ is earlier than or equal to the ‘original estimated day of mitigation,’ the value of DDF is 1.

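- If the ‘given day’ is after the ‘original estimated day of mitigation’ and the delay is less than or equal to the threshold delay, the value of DDF is delay as of today/delay as per the original estimated day of mitigation.

- If the delay exceeds the threshold delay, the value of DDF is that same ratio raised to an exponent selected by the organization.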
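Below is a minimal Python sketch of the three-phase rule. The text does not give two of its details, so both are assumptions and are flagged in the code. First, the 300-day threshold is compared against days elapsed since identification, the same count used in the ratio. Second, the exponent is a hypothetical 2.0. The names are illustrative.

```python
from datetime import date


def days_elapsed(identified: date, as_of: date) -> int:
    # Identification day counts as day 1 (Jan 1 -> Apr 15 = 105 days).
    return (as_of - identified).days + 1


def ddf_with_threshold(identified: date, target: date, as_of: date,
                       threshold_days: int = 300, exponent: float = 2.0) -> float:
    """Three-phase DDF: 1, then a plain ratio, then the ratio raised to `exponent`.

    ASSUMPTIONS: `threshold_days` is compared against days elapsed since
    identification, and `exponent` (default 2.0) is a hypothetical value;
    the article specifies neither.
    """
    if as_of <= target:
        return 1.0  # phase 1: still within the original commitment
    elapsed = days_elapsed(identified, as_of)
    ratio = elapsed / days_elapsed(identified, target)
    if elapsed <= threshold_days:
        return ratio  # phase 2: linear growth with the delay
    return ratio ** exponent  # phase 3: beyond the threshold


if __name__ == "__main__":
    jan1, mar31 = date(2023, 1, 1), date(2023, 3, 31)
    for as_of in (date(2023, 10, 27), date(2023, 10, 28)):  # days 300 and 301
        print(as_of, round(ddf_with_threshold(jan1, mar31, as_of), 2))
```

Use January 1st and March 31st as before. The sketch then returns 300/90 (about 3.33) on October 27th, which is day 300. On October 28th it returns (301/90)^2 (about 11.2). The jump at the threshold follows directly from switching from the ratio to a power of it. An organization adopting this rule would need to decide whether such a step change is intended.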

There are other questions yet to be answered, such as:

- Is there a maximum DDF at which the risk rating itself changes? That is, can a medium risk become a high risk after a certain delay in mitigation?

Since this article suggests that mitigation delays effectively increase the count of risks, the logical next step is to analyze the impact of extreme mitigation delays on the risk category itself. Certain threats to information systems seem to grow over time. Some classes of risks are likely to be affected more severely by delays than others. An example is risks related to the technical family of security controls, such as a weak password. Further research on this question would therefore yield a better return on investment if it began with the vulnerabilities, threats, and risks related to technical controls.

- Can we change the threshold we use for DDF based on the original risk rating itself? Can an organization have one threshold for medium risks (say, 300 days) and another one for high risks (say, 200 days)?

This question matters because organizations should have less tolerance for delays in mitigating risks categorized as high. Typically, organizations accept high risks as part of their residual risk only with significant concern. Monitoring risks with metrics such as DDF should reflect that heightened concern about mitigation delays on high risks. A lower DDF threshold for high risks reflects a lower tolerance for delays in mitigating them. It also increases their visibility and helps allocate resources for mitigation. To preserve the value of tracking risks over time with DDF-based metrics, the DDF threshold should not change once set unless absolutely necessary.
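Below is a minimal Python sketch of rating-specific thresholds, using the illustrative values from the question above (300 days for medium risks, 200 days for high risks). The exponent of 2.0 and the day-counting convention are assumptions, and the names are hypothetical. The text suggests no threshold for low risks, so the sketch defines none.

```python
from datetime import date

# Example thresholds from the text ("say, 300 days" for medium risks,
# "say, 200 days" for high risks). No low-risk threshold is suggested,
# so none is defined here.
THRESHOLD_DAYS = {"high": 200, "medium": 300}


def ddf_for_rating(rating: str, identified: date, target: date, as_of: date,
                   exponent: float = 2.0) -> float:
    """Threshold-based DDF whose threshold depends on the risk rating.

    ASSUMPTIONS: `exponent` (2.0) is hypothetical, and days are counted with
    the identification day as day 1.
    """
    threshold = THRESHOLD_DAYS[rating]  # KeyError for ratings without a threshold
    if as_of <= target:
        return 1.0
    elapsed = (as_of - identified).days + 1
    planned = (target - identified).days + 1
    ratio = elapsed / planned
    return ratio if elapsed <= threshold else ratio ** exponent


if __name__ == "__main__":
    jan1, mar31 = date(2023, 1, 1), date(2023, 3, 31)
    day_250 = date(2023, 9, 7)  # 250th day of 2023
    for rating in ("medium", "high"):
        print(rating, round(ddf_for_rating(rating, jan1, mar31, day_250), 2))
```

On day 250, a medium risk has a DDF of about 2.78. A high risk with the same dates has already crossed its 200-day threshold and sits at about 7.72. Keeping the thresholds in a single lookup table also makes it easy to hold them fixed once set, as recommended above.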

- Can the average DDF computed for high risks be combined with the same for medium and low risks in order to express overall DDF for risks of all ratings?

It is inevitable that once DDF is computed and understood, it will be used to compare how much mitigation delay exists among risk groupings. For example, one organizational unit could be compared against another using the DDF for its medium risks. An average DDF computed across high, medium, and low risk categories requires further statistical analysis to avoid misrepresenting the extent of delay. A weighted average that accounts for the number of risks in each category should probably be considered. In other words, a single high risk delayed by six months is not the same as three high risks delayed by four months.
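Below is one possible reading of the weighted-average suggestion, sketched in Python with a hypothetical portfolio of DDF values. The sketch contrasts three views:

- A naive average of per-rating averages.
- A count-weighted average, which is equivalent to averaging over all individual risks.
- An "effective risk count" per rating. This follows the article's idea that one medium risk with a DDF of 1.17 is treated as 1.17 medium risks.

The function name and the data are illustrative only.

```python
from statistics import mean


def summarize_ddf(ddfs_by_rating: dict[str, list[float]]) -> dict:
    """Contrast a naive average of per-rating DDF averages with count-aware views."""
    # Average DDF within each rating (ratings with no risks are skipped).
    category_means = {r: mean(v) for r, v in ddfs_by_rating.items() if v}
    # Naive: every rating counts equally, regardless of how many risks it holds.
    unweighted = mean(category_means.values())
    # Count-weighted: weighting each rating's mean by its number of risks is
    # equivalent to averaging over all individual risks.
    all_values = [x for v in ddfs_by_rating.values() for x in v]
    count_weighted = sum(all_values) / len(all_values)
    # Effective risk count per rating: a risk with DDF 1.17 counts as 1.17 risks.
    effective_count = {r: sum(v) for r, v in ddfs_by_rating.items()}
    return {"unweighted": unweighted,
            "count_weighted": count_weighted,
            "effective_count": effective_count}


if __name__ == "__main__":
    # Hypothetical portfolio: DDF values per open risk, grouped by rating.
    portfolio = {
        "high": [3.0],
        "medium": [1.0, 1.0, 1.0, 1.0, 1.5],
        "low": [1.0, 1.0, 1.0, 1.0],
    }
    print(summarize_ddf(portfolio))
```

For this portfolio, the naive average is about 1.7 because the single delayed high risk dominates. The count-weighted average is 1.25. The effective counts (3.0 high, 5.5 medium, 4.0 low) keep each rating's volume visible, which speaks to the difference between one delayed high risk and several.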
